Like death and taxes, system outages are a certainty: it is not a question of if, but of when. Unlike death, which tends to last a while, a system cannot stay down. The name of the game is to bring it back as quickly as possible and to limit the impact on customers and on the organisation's bottom line.

System resilience is the ability of a system to withstand major disruption within acceptable degradation parameters and to recover within an acceptable time.

Let's make this more vivid. A couple of years ago, before scalable cloud infrastructure was widely available, Black Friday was a major issue (it still is). Payment processing loads go up tenfold on this day, and only the very largest organisations had the spare capacity to handle them. On that fateful day, the payment capability of one of South Africa's banks started failing. To protect the country's overall payment system, the norm is to switch processing over to another bank, and contracts between banks are in place for these eventualities. The failure at this particular bank then created a domino effect: the second bank started to fail as well, because it had to pick up the extra processing. Remember that it still had to process its own Black Friday load, and now also all the payments from the first bank. Now two banks were struggling.

The irony is that customers thought the online stores were having problems, and this caused reputational damage to the stores. Customers didn't realise it was the banks' payment capability that was failing.

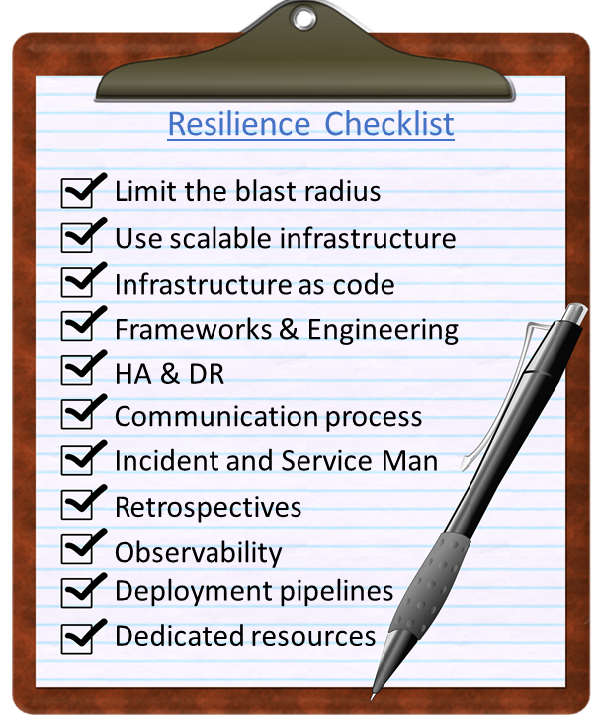

So, as a responsible CIO or IT manager, how do you protect against failures like this? Here is a checklist that serves as a first step towards general system resilience. Tick off how many of these you already have in place:

- Limit the blast radius: When a system goes down, does it bring down other systems in the ecosystem? Patterns such as circuit breakers, loose coupling and graceful degradation are the master strokes here, because they keep the impact contained.

- Use scalable infrastructure: When you have a spike in demand, can you turn on more capacity seamlessly? This is where using the cloud is a master stroke.

- Infrastructure as code: Is your infrastructure generated by code and fully automated? Finger trouble and configuration drift between environments are major causes of system failure. Using containers is a master stroke when it comes to infrastructure as code. Scripting is a start, but in no way the final goal.

- Observability: Do you monitor your business processes? See failure before it hits you. Observability lets you understand a system from the outside by letting you ask questions of it without knowing its inner workings. The master stroke here is to observe your critical business processes rather than just application states. Your observability practice is strengthened by promoting the use of OpenTelemetry (OTel) and Observability-Driven Development (ODD), and by defining an observability stack for teams across the organisation to use.

- HA & DR: Can you switch over to your High Availability (HA) and Disaster Recovery (DR) environments seamlessly and quickly? When did you last test your DR comprehensively? HA and DR have been with us since the dawn of IT (or almost). They are necessary, baseline practices, but in this day and age they are not sufficient. The master stroke here is to test frequently.

- Communication Process: Do your customers hear from you that a system is down, rather than discovering it for themselves? In the current digital era, everything is about the customer. Instead of communicating a system failure or outage only to your recovery teams, how are you meaningfully engaging with your customers about it? The master stroke here is using social media to engage meaningfully with all your customers.

- Incident and Service Management: When did you last revisit your incident and service management processes? These two practices are the stalwarts of resilience: detect, respond, alert, communicate and manage.

- Retrospectives: Do you learn from failure? Is it part of your DNA? Here the master stroke is the blameless post-mortem. Nothing is as morale-breaking and detrimental to resilience as nailing people for non-wilful acts that bring systems down. If you penalise people for owning up to failure, they will never admit to or reveal a failure again, and system restore times will increase sharply.

- Learn from frameworks and engineering practices: Glean the best from frameworks that have been around for a long time, e.g. COBIT, ITIL and the CERT Resilience Management Model. Again, this is necessary but not sufficient. The master stroke here is bolstering these methodologies with modern techniques like Site Reliability Engineering (SRE) and modern engineering practices.

- Deployment pipelines: Do you have automated pipelines in place that move your code seamlessly from a developer's laptop to the server or cloud? Do they include automated testing, source code control, and security and compliance checks?

- Dedicated resources: Do you have dedicated people who eat, drink and sleep system reliability? Do they have the experience, tools and freedom to operate across the silos in your organisation?
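To make the first checklist item (limit the blast radius) more concrete, here is a minimal circuit breaker sketch in Python. It is an illustration under stated assumptions, not a production implementation. The `CircuitBreaker` class, its parameters (`failure_threshold`, `reset_timeout`) and the payment functions in the demo are hypothetical names, and the sketch assumes a single-threaded caller. After a set number of consecutive failures the breaker "opens" and stops calling the failing dependency, returning a fallback instead (graceful degradation). After a cool-down it lets a trial call through ("half-open") to see whether the dependency has recovered.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when the breaker is open and no fallback was supplied."""


class CircuitBreaker:
    """Minimal, single-threaded circuit breaker (hypothetical, illustrative only)."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout = reset_timeout          # seconds to wait before a trial call
        self.clock = clock                          # injectable so demos/tests can fake time
        self.failures = 0
        self.opened_at: Optional[float] = None      # None means the breaker is closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # cool-down elapsed: allow a trial call
        return "open"

    def call(self, func: Callable[[], T],
             fallback: Optional[Callable[[], T]] = None) -> T:
        if self.state == "open":
            # Fail fast: do not touch the struggling dependency at all.
            if fallback is not None:
                return fallback()
            raise CircuitOpenError("circuit is open; call skipped")
        try:
            result = func()
        except Exception:
            self.failures += 1
            # A failed trial call re-opens immediately; otherwise open at the threshold.
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            if fallback is not None:
                return fallback()  # degrade gracefully instead of propagating the error
            raise
        # Any success closes the breaker and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result


if __name__ == "__main__":
    fake_now = [0.0]
    breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0,
                             clock=lambda: fake_now[0])

    def authorise_payment() -> str:  # hypothetical downstream call that is failing
        raise ConnectionError("payment service unavailable")

    def queue_for_retry() -> str:    # hypothetical graceful-degradation path
        return "queued"

    print(breaker.call(authorise_payment, fallback=queue_for_retry))  # queued
    print(breaker.call(authorise_payment, fallback=queue_for_retry))  # queued
    print(breaker.state)  # open
    fake_now[0] = 11.0
    print(breaker.state)  # half-open
    print(breaker.call(lambda: "authorised"))  # authorised
    print(breaker.state)  # closed
```

Failing fast like this stops callers from piling more load onto a dependency that is already struggling. In practice you would likely add thread safety, one breaker per dependency and metrics, or use an established library rather than a hand-rolled class.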

The more of the checklist you tick, the fewer outages you will have. Even more importantly, you will be quicker to detect problems, limit their impact and bring your systems back up. Make these capabilities habits, and high uptime will become a feature of your systems.

Further reading

Firesmith, Donald. "System Resilience Series", Software Engineering Institute Blog, Carnegie Mellon University, 2019.

Back to the Table of Contents of our Techno Fluency book